Building an Interactive 3D Hero Animation

I wanted to add a nice visual effect to the hero section of my website, and because I have worked quite a bit with three.js for my project Pausly, I thought it would be a great opportunity to showcase my browser rendering skills.

I tried to keep it simple, but I couldn’t resist diving into details and fine-tuning performance. Optimizing every aspect of this animation turned out to be a really fun challenge!

This post describes my process of building this animation from start to finish, including all the optimizations I made. Check out the landing page of this blog to see what it looks like and how it interacts with your cursor.

The Concept

The first step was finding a concept. I knew I wanted something in 3D, so I started experimenting with three.js and went through examples online to find the direction I wanted to take. My goal was something fairly abstract, and particle animations immediately caught my eye.

The animation needed to fit in the hero banner, so I began with a simple rotating sphere. It was a start, but it wasn’t very exciting. Then I experimented with cursor interactivity and quickly landed on a force-field effect, where particles near the cursor get attracted and stick to it.
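One way such a force field could be expressed, as a rough sketch rather than the code running on the landing page: every particle within some radius of the cursor is pulled a fraction of the way toward it on each frame. The name `attractToCursor` and the `radius` and `strength` parameters are assumptions made for illustration.

```javascript
// Hypothetical helper: pulls one particle toward the cursor if it is close enough.
// `radius` and `strength` are illustrative tuning values, not taken from the real animation.
const attractToCursor = (particle, cursor, radius = 1, strength = 0.2) => {
  const dx = cursor.x - particle.x
  const dy = cursor.y - particle.y
  const distance = Math.hypot(dx, dy)

  // Particles outside the force field are left untouched.
  if (distance > radius) return particle

  // Move a fixed fraction of the remaining distance each frame, so the particle
  // converges on the cursor and appears to stick to it.
  return { x: particle.x + dx * strength, y: particle.y + dy * strength }
}

const cursor = { x: 0, y: 0 }
const near = attractToCursor({ x: 0.3, y: 0.4 }, cursor) // distance 0.5, inside the field
const far = attractToCursor({ x: 3, y: 4 }, cursor) // distance 5, outside the field
```

Called once per particle and frame, a function like this makes nearby particles drift toward the cursor while the rest of the shape stays intact.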

Particle Animations

This post isn’t meant to be a tutorial on particle animation, but I'll briefly cover the core concepts.

Create a THREE.WebGLRenderer() and append its canvas element to the body. Then create the particles as a THREE.Points object and add it to the scene. Each point’s position is a THREE.Vector3(x, y, z), and the points share a material: new THREE.PointsMaterial({ color: 0xffffff }). You then write an animate function that updates the particle positions and renders the scene, and use the browser’s requestAnimationFrame() to call it for each frame.

Replacing the Sphere with Shapes

Once I had the basic effect, I decided that a simple sphere wouldn’t cut it. I wanted something more dynamic, so I decided to implement multiple shapes and have them fade seamlessly into one another. The question was: how do I render different objects? A sphere is easy because it’s based on a simple mathematical equation, but more complex shapes are trickier.
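To make the "simple mathematical equation" concrete: a sphere can be filled with evenly spread points using a Fibonacci (golden-angle) spiral. The sketch below illustrates that idea and is not necessarily how the original sphere was generated; `createSpherePositions` is a made-up name.

```javascript
// Illustrative only: distribute `count` points on a sphere with a golden-angle spiral.
const createSpherePositions = (count, radius = 1) => {
  const positions = new Float32Array(count * 3)
  const goldenAngle = Math.PI * (3 - Math.sqrt(5))

  for (let i = 0; i < count; i++) {
    // y runs from just below +1 to just above -1 in evenly spaced steps.
    const y = 1 - ((i + 0.5) / count) * 2
    // Radius of the horizontal circle at height y.
    const ringRadius = Math.sqrt(1 - y * y)
    const angle = i * goldenAngle

    positions[i * 3 + 0] = Math.cos(angle) * ringRadius * radius
    positions[i * 3 + 1] = y * radius
    positions[i * 3 + 2] = Math.sin(angle) * ringRadius * radius
  }

  return positions
}

const spherePositions = createSpherePositions(10000)
```

An arbitrary mesh has no such closed-form description, which is why the shapes below need a different source for their points.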

First Iteration: Simple Polygons

The first iteration used simple polygons extruded in 3D. The polygons were defined as points in an array, and I converted them to triangles using the poly2tri library.

This worked well. The shape data was small, and converting polygons into triangles and extruding them wasn't too expensive. I built shapes like slashes (/), Xs, and Ys. But whenever I showed someone, their first question was always:

That looks cool. What do the symbols mean?

I quickly realized I should display more complex, meaningful shapes.

Second Iteration: Exporting Points from Blender

Since I wanted custom shapes, I turned to Blender. Modeling simple objects that can be expressed as particles is easy enough, but the challenge is to export them efficiently for use on the landing page without consuming too many resources.

The obvious approach is to export a .gltf file, load it with THREE.GLTFLoader, and then somehow distribute the particles on the surface of the object. But the more I thought about it, the more this approach felt like overkill.

I quickly concluded that I should distribute the particles in Blender and simply export the points. This way, I have full control over how the points are distributed, and I don’t have to do too much work in the browser (which would use up resources and increase startup time on the client).

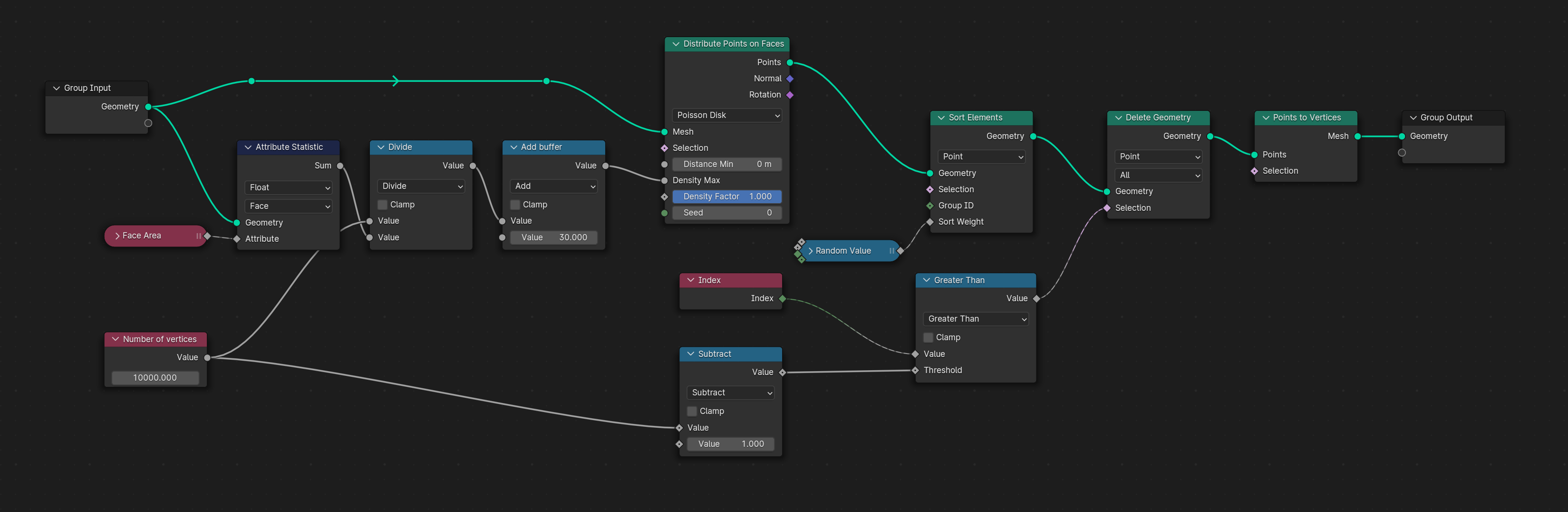

Distributing Points in Blender

Distributing points on objects in Blender has become quite easy. In the past, you needed a hair system, but now you can simply use geometry nodes! You add a geometry nodes modifier, and within it, use the Distribute Points on Faces node. Since I targeted exactly 10,000 points for each object, this became a bit more complicated, as the node only provides a Density parameter. Fortunately, calculating the density is just a matter of dividing the desired number of points by the surface area of the object and plugging that value into the Density parameter. I noticed this approach was sometimes off by a few points, so I added a small “buffer” to the particle count and later removed any excess points using an additional node.
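The density calculation is simple enough to write out. In Blender it lives inside the node tree; the JavaScript below only spells out the arithmetic, and the surface area of 2.5 and the buffer of 50 points are made-up numbers.

```javascript
const TARGET_POINTS = 10000
const BUFFER_POINTS = 50 // hypothetical safety margin, trimmed away again later

// Density = points per unit of surface area.
const densityFor = (surfaceArea, targetPoints = TARGET_POINTS, buffer = BUFFER_POINTS) =>
  (targetPoints + buffer) / surfaceArea

// Example with an assumed surface area of 2.5 square units.
const density = densityFor(2.5) // 10050 / 2.5 = 4020
```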

I also realized that simply removing points by index isn’t ideal because the Distribute Points on Faces node doesn’t place points in a random order. If you remove the last points added, parts of your mesh can disappear unevenly. To avoid this, I sorted the points randomly before removing any.
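The same idea can be sketched outside Blender. Below, a Fisher-Yates shuffle randomizes the point order before the list is cut to the target size, so the removed points come from all over the shape instead of from one region. In the actual setup this happens in geometry nodes; the function names here are illustrative.

```javascript
// Fisher-Yates shuffle; `random` can be swapped out to make results reproducible.
const shuffle = (items, random = Math.random) => {
  const result = [...items]
  for (let i = result.length - 1; i > 0; i--) {
    const j = Math.floor(random() * (i + 1))
    const swapped = result[i]
    result[i] = result[j]
    result[j] = swapped
  }
  return result
}

// Shuffle first, then drop the excess points.
const trimToCount = (points, targetCount, random = Math.random) =>
  shuffle(points, random).slice(0, targetCount)

// 10,050 dummy points standing in for the distributed points plus buffer.
const points = Array.from({ length: 10050 }, (_, i) => [i, 0, 0])
const trimmed = trimToCount(points, 10000)
```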

Here’s the full geometry nodes setup:

Exporting the Points

The next step was to get the points into a format suitable for my landing page. At this stage, I was already focused on performance, so I had two main criteria:

- The files should be as small as possible.

- The browser shouldn’t have to do much work to load the points.

The most obvious approach would be to export the points as a JSON file. Each point consists of three values (x, y, z), so the JSON might look something like this:

    [
      [0.1421, 0.24883, 1.3235],
      [0.8932, -0.4721, 0.9145],
      [0.3347, 1.2856, -0.5629]
      // etc...
    ]

While this format isn’t optimized, gzip compression would make the file size manageable. The real drawback is that the browser would need to parse the entire JSON, which isn’t very efficient.

The simplest way to achieve a more performant solution was to export the points as a binary file. So, I wrote a simple Blender Python script that iterates over all points and writes each position to a binary file as Float32 values. Since each value takes up 4 bytes and each point has 3 values (12 bytes per point), parsing it is straightforward.

Here’s the relevant part of the script:

    file_path = f"{obj.name}.dat"

    # The 'b' flag is for binary
    with open(file_path, 'wb') as f:
        for vert in eval_mesh.vertices:
            # Pack each coordinate as Float32 binary
            # and write them in sequence
            f.write(struct.pack('f', vert.co.x))  # 'f' is for Float32
            f.write(struct.pack('f', vert.co.z))
            f.write(struct.pack('f', -vert.co.y))

Now we have a binary .dat file for each object that we can load in the browser. Nice!
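The resulting file is a flat sequence of 32-bit floats, 12 bytes per point. The sketch below mimics the export in JavaScript to make the layout and the axis swap visible: the script writes Blender's (x, y, z) as (x, z, -y), presumably because Blender treats Z as up while the scene here treats Y as up. `packPoints` is a hypothetical helper, and the explicit little-endian byte order is an assumption (Python's 'f' format uses the machine's native order, which is little-endian on practically all current desktop hardware).

```javascript
// Hypothetical helper mirroring the export: writes Blender-style points
// as little-endian Float32 values in (x, z, -y) order.
const packPoints = (blenderPoints) => {
  const buffer = new ArrayBuffer(blenderPoints.length * 3 * 4) // 12 bytes per point
  const view = new DataView(buffer)
  blenderPoints.forEach(([x, y, z], i) => {
    const offset = i * 12
    view.setFloat32(offset + 0, x, true) // true = little-endian
    view.setFloat32(offset + 4, z, true)
    view.setFloat32(offset + 8, -y, true)
  })
  return buffer
}

const packed = packPoints([
  [1, 2, 3],
  [0.5, -0.25, 4],
])

// Read the first point back, value by value, with the same byte order.
const reader = new DataView(packed)
const firstPoint = [0, 4, 8].map((offset) => reader.getFloat32(offset, true))
// firstPoint is [1, 3, -2]
```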

Removing the three.js Dependency

At this point, I decided to remove three.js. Using it to render particles on a WebGL canvas was just overkill. The library is quite large and includes many features that were completely unnecessary for this project.

I also wanted to load the particles (which I had exported to a Float32 array) as efficiently as possible, and I wasn't confident that three.js would allow me to achieve this level of optimization.

Here's a brief overview of how to create a particle animation in WebGL without any external libraries:

- Create a WebGL context.

- Provide a vertex and fragment shader to render particles on the GPU.

- Provide the positions of the particles to the program.

- Write a function that updates the particle positions and reflects them in each frame using requestAnimationFrame.

Here’s a simple pseudo-code example:

    const canvas = document.querySelector('canvas')
    const gl = canvas.getContext('webgl', { alpha: true, antialias: true })

    const vertexShader = compileShader(gl.VERTEX_SHADER, vertexShaderSource)
    const fragmentShader = compileShader(gl.FRAGMENT_SHADER, fragmentShaderSource)

    // Create the actual particles.
    // This will need to be replaced by the actual data from our binary files.
    const positions = new Float32Array(PARTICLE_COUNT * 3)

    const positionBuffer = gl.createBuffer()
    gl.bindBuffer(gl.ARRAY_BUFFER, positionBuffer)
    gl.bufferData(gl.ARRAY_BUFFER, positions, gl.DYNAMIC_DRAW)

    const updateParticles = () => {
      for (let i = 0; i < PARTICLE_COUNT; i++) {
        const index = i * 3

        // In this loop all the calculation is done to get the new
        // positions of all particles.
        const newPosition = [
          /* ... */
        ]

        positions[index + 0] = newPosition[0]
        positions[index + 1] = newPosition[1]
        positions[index + 2] = newPosition[2]
      }
    }

    const animate = () => {
      updateParticles()

      // Update particle positions
      gl.bindBuffer(gl.ARRAY_BUFFER, positionBuffer)
      gl.bufferData(gl.ARRAY_BUFFER, positions, gl.DYNAMIC_DRAW)

      gl.clear(gl.COLOR_BUFFER_BIT | gl.DEPTH_BUFFER_BIT)
      gl.drawArrays(gl.POINTS, 0, PARTICLE_COUNT)

      requestAnimationFrame(animate)
    }

    animate()

Working with WebGL directly, without a library, requires a bit more initial setup, but Mozilla's WebGL documentation is very comprehensive. Writing the shaders is somewhat more involved (I wanted the particles to fade out when they're further back and create a colorful gradient), but it's not overly complex.
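The pseudo-code above relies on a `compileShader` helper and two shader sources that aren't shown. A minimal sketch of what they might look like follows. The shader bodies, the attribute name `position`, and the fade formula are assumptions, not the shaders used on the landing page, and unlike the call in the pseudo-code this helper takes `gl` as an explicit first argument.

```javascript
// Assumed vertex shader: passes the position through and derives a fade factor from depth.
const vertexShaderSource = `
  attribute vec3 position;
  varying float vFade;
  void main() {
    gl_Position = vec4(position, 1.0);
    gl_PointSize = 2.0;
    // z is in [-1, 1] here; points further back (larger z) become more transparent.
    vFade = 1.0 - (position.z * 0.5 + 0.5);
  }
`

// Assumed fragment shader: white particles whose alpha follows the fade factor.
const fragmentShaderSource = `
  precision mediump float;
  varying float vFade;
  void main() {
    gl_FragColor = vec4(1.0, 1.0, 1.0, vFade);
  }
`

// Compiles one shader and surfaces the compiler log on failure.
const compileShader = (gl, type, source) => {
  const shader = gl.createShader(type)
  gl.shaderSource(shader, source)
  gl.compileShader(shader)
  if (!gl.getShaderParameter(shader, gl.COMPILE_STATUS)) {
    const log = gl.getShaderInfoLog(shader)
    gl.deleteShader(shader)
    throw new Error(`Shader compilation failed: ${log}`)
  }
  return shader
}
```

For the alpha value to have a visible effect, blending has to be enabled on the context (`gl.enable(gl.BLEND)` together with a suitable `gl.blendFunc`).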

Loading the Binary Files

So now we have the binary .dat files and a WebGL scene. How do we get the .dat files into our Float32Array? Luckily for me, that’s pretty easy. A Float32Array is actually a subclass of TypedArray, and a TypedArray is just a view on a binary data buffer! So we just need to create this TypedArray with the contents of our binary file, and we’re good to go. The code for that couldn’t be simpler:

    const url = `/points/${shape}.dat`

    // Fetch the binary file from the provided URL
    const response = await fetch(url)

    // Check if the response is successful
    if (!response.ok) {
      throw new Error(`HTTP error! Status: ${response.status}`)
    }

    // Read the response as an ArrayBuffer
    const arrayBuffer = await response.arrayBuffer()
    const positions = new Float32Array(arrayBuffer)

As you can see, the fetch() function and the Float32Array play nicely together. You can simply pass the arrayBuffer from the response to the Float32Array constructor.

It doesn’t get more efficient than this. The server sends the data as a Float32 array buffer, which the client can then directly load into memory without any decoding. Nice!
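That a typed array is only a view can be checked without any network request. In this sketch, a small `ArrayBuffer` stands in for the downloaded file, and two views over it see the same bytes. The length check at the end is an optional sanity test that is not part of the original code.

```javascript
// Stand-in for `await response.arrayBuffer()`: room for two points (2 * 3 floats * 4 bytes).
const arrayBuffer = new ArrayBuffer(24)

// Both views share the buffer's memory; creating them copies nothing.
const positions = new Float32Array(arrayBuffer)
const bytes = new Uint8Array(arrayBuffer)

// Writing through the float view changes the bytes seen by the byte view.
positions[0] = 1

// Optional sanity check: a valid file holds a whole number of (x, y, z) triples.
const pointCount = positions.length / 3
if (!Number.isInteger(pointCount)) {
  throw new Error('File size is not a multiple of 12 bytes')
}
```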

Further Optimizations

Now I had a working WebGL scene with particles that loads efficiently without the browser having to do any initial computation. But I wanted to take things a bit further.

WebGL is great because it offloads all the heavy lifting to the GPU. But JavaScript still runs on the CPU for all the particle calculations — even worse: on the main JavaScript thread. The calculations are fairly simple, but doing them for 10,000 particles is still a lot of work. It runs smoothly on my machine (and most machines I’ve tested it on), but I wanted to make sure that even slower machines wouldn’t get a laggy UI. I don’t mind as much if the animation can’t run at 60fps on older machines, but the UI should still be fully responsive.

In JavaScript, if you want to offload computation from the main thread, web workers are the tool to use. They allow scripts to run in a background thread that does not block the execution of the main thread.

Moving the Computation to a Web Worker

Although I later realized that there was a simpler way, I’ll still describe my first approach here because there are some interesting takeaways. If you're only interested in the final solution, you can skip to the next section.

To move the computation to a web worker, you need to create a new worker and the script to go along with it. You can then send data to and receive data from the worker. Since the worker runs in a separate thread, you can’t directly access variables from the other context. Here’s what a simple web worker implementation would look like:

    // Main thread
    const myWorker = new Worker('worker.js')

    myWorker.postMessage({ positions })
    myWorker.onmessage = (e) => {
      console.log('New positions: ', e.data)
    }

    // Web worker (worker.js)
    self.onmessage = ({ data }) => {
      const positions = data.positions
      const newPositions = calculateNewPositions(positions)
      self.postMessage({ positions: newPositions })
    }

However, there is one problem with this approach: because the worker runs in a different thread, sending data to it with postMessage() copies the data. If the data were shared, both threads would be able to write and read from it at the same time, which wouldn’t be safe. But sending 3 × 10,000 Float32 values (4 bytes each) means the animation would copy 120 kB of data in memory for each frame just to send the data to the worker. Then the data would need to be sent back, totaling 240 kB.
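These numbers are easy to verify. The snippet below only does the arithmetic; the 60 frames per second used for the last figure is an assumption about the target frame rate.

```javascript
const PARTICLE_COUNT = 10000
const positions = new Float32Array(PARTICLE_COUNT * 3)

// 10,000 particles * 3 coordinates * 4 bytes per Float32
const bytesPerDirection = positions.byteLength // 120000 bytes = 120 kB

// To the worker and back again
const bytesPerFrame = bytesPerDirection * 2 // 240000 bytes = 240 kB

// At an assumed 60 frames per second, this is 14.4 MB copied every second.
const bytesPerSecond = bytesPerFrame * 60 // 14400000 bytes
```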

The way to avoid this is to use Transferable Objects. In short, there are some JavaScript objects that can be transferred between threads without copying. The memory block for these objects simply stays intact, and ownership is transferred to the other thread. The original object can no longer access the underlying memory: a transferred ArrayBuffer is detached, its byteLength becomes 0, and any typed array viewing it appears empty.

Here are the transferable objects: ArrayBuffer, MessagePort, ReadableStream, WritableStream, TransformStream, WebTransportReceiveStream, WebTransportSendStream, AudioData, ImageBitmap, VideoFrame, OffscreenCanvas, RTCDataChannel, MediaSourceHandle, and MIDIAccess. A Float32Array is not itself on this list, but since it is a view on an ArrayBuffer, its underlying buffer (available as its .buffer property) can be transferred.

The syntax for transferring an object to another thread is a bit strange, but simple:

    const positions = new Float32Array(PARTICLE_COUNT * 3)

    worker.postMessage(positions, [positions.buffer])

    // The buffer is now detached on this side:
    // positions.length is 0 and the data is no longer readable here.

The important part is the second argument to postMessage: [positions.buffer], a list of transferable objects to be transferred.

This way, I could simply "move" the data from the main thread to the worker thread, compute it there, and then, when done, transfer the data back to the main thread to update my WebGL context and draw the new positions. No unnecessary memory copies, and the performance immediately improved.
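What happens to the sender's array after a transfer can be observed in a single thread with `structuredClone()`, which uses the same structured clone algorithm as `postMessage()` and accepts the same kind of transfer list. It is only a stand-in here to make the effect visible without setting up a worker.

```javascript
const positions = new Float32Array([1, 2, 3])

// Move the underlying buffer instead of copying it.
const moved = structuredClone(positions, { transfer: [positions.buffer] })

// The receiving side holds the data...
const movedValues = Array.from(moved) // [1, 2, 3]

// ...while the original view is left with a detached, zero-length buffer.
const remainingLength = positions.length // 0
const remainingBytes = positions.buffer.byteLength // 0
```

This is why the animation code has to avoid touching the array between sending it off and receiving it back.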

After implementing this, I realized there was a simpler way. Maybe you noticed it when I listed the Transferable Objects: along with ArrayBuffer was OffscreenCanvas, which made me realize I could simplify everything.

Moving the Canvas to a Web Worker

Instead of setting up the whole animation and running it on the main thread, and only offloading the particle position computations to a web worker, I could just move the entire canvas to a web worker and do everything there.

So I rewrote my implementation, which made the code a lot simpler. Instead of sending the Float32Array back and forth in the animate function (and having to carefully avoid accessing or modifying the positions array when it had been transferred to the worker), I could simply send the OffscreenCanvas to the web worker on startup and set up the entire scene there:

    const canvas = document.querySelector('canvas')
    const offscreenCanvas = canvas.transferControlToOffscreen()

    worker?.postMessage(offscreenCanvas, [offscreenCanvas])

No more data transfer back and forth: just a single call at the start of the animation, and then the web worker can handle everything.
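On the receiving end, the worker gets the canvas in its message handler and can create the WebGL context there. Below is a rough sketch of the worker side. The `startScene` function and the injected `scheduleFrame` callback are assumptions, introduced so the loop can also be exercised outside a browser; they are not taken from the original implementation.

```javascript
// worker.js (hypothetical file name)

// Sets up WebGL on the transferred canvas and starts the render loop.
// `scheduleFrame` is injected so the loop does not depend on a global.
const startScene = (canvas, scheduleFrame) => {
  const gl = canvas.getContext('webgl', { alpha: true, antialias: true })
  if (!gl) throw new Error('WebGL is not available in this context')

  const frame = () => {
    gl.clear(gl.COLOR_BUFFER_BIT | gl.DEPTH_BUFFER_BIT)
    // Updating particle positions and drawing them would go here.
    scheduleFrame(frame)
  }

  scheduleFrame(frame)
  return gl
}

// Only wire up the message handler where a worker-style global scope exists.
if (typeof self !== 'undefined' && typeof self.requestAnimationFrame === 'function') {
  self.onmessage = ({ data: canvas }) => {
    // The message data is the OffscreenCanvas sent from the main thread.
    startScene(canvas, (callback) => self.requestAnimationFrame(callback))
  }
}
```

Since everything now happens inside the worker, the positions array never has to leave it.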

Final Words

My main takeaway was that it’s entirely feasible to create an animation from scratch with WebGL in around 300 lines of code, avoiding the added weight of importing three.js.

This project also showed me that even for simple 2D canvas renders, if the computation becomes a bit more intensive (like downsizing images, for example), it’s easy to simply transfer the canvas to a web worker and do the computation there.

I hope this was an interesting read and that it inspires you to create something yourself.
